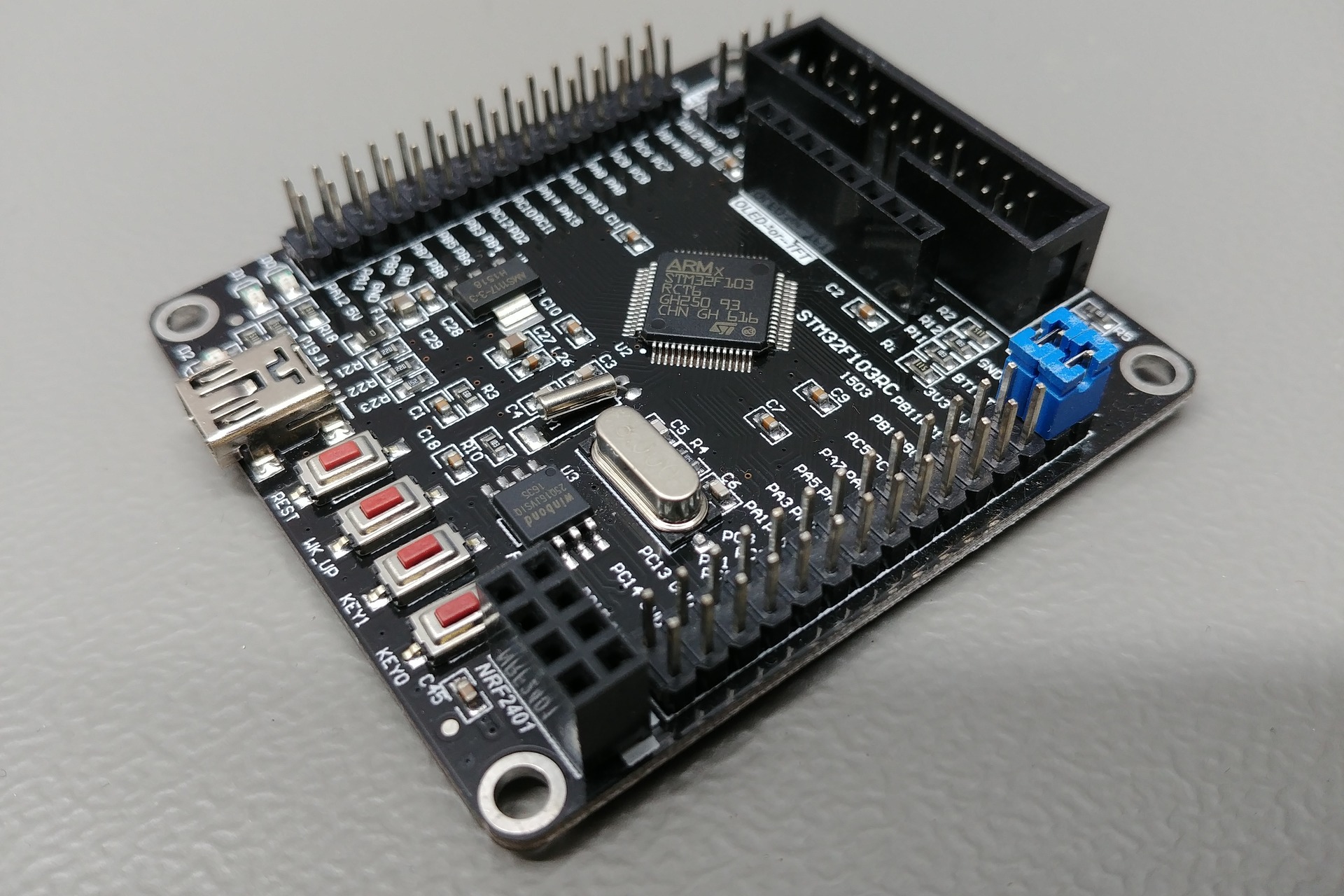

How to implement a multi-class neural network with STM32F103?


Implementing a multi-class neural network on an STM32F103 (Cortex-M3, up to 72 MHz, no FPU) is challenging because RAM and Flash are scarce: the common C8/CB parts, for example, have 20 KB of SRAM and 64 or 128 KB of Flash. It is still feasible for small networks with low-dimensional inputs (e.g., two or three sensor readings).

Here's how you can do it step-by-step:

1. Design a Small Neural Network on PC

Use Python with TensorFlow/Keras to design and train a small model, for example a dense network with 2 inputs, one small hidden layer, and one output per class.

2. Convert Model to C (Quantized if Needed)

Options:

- STM32Cube.AI (official ST tool)
  - Converts Keras/TensorFlow models to C code optimized for STM32
  - Integrates with STM32CubeMX
- uTensor, CMSIS-NN, or TensorFlow Lite for Microcontrollers (TFLM)
  - Lightweight inference engines for ARM Cortex-M cores

On the STM32F103, use 8-bit integer quantization to reduce memory use and speed up inference; the Cortex-M3 has no FPU, so floating-point math is emulated in software.
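
As a rough illustration of what 8-bit quantization does to each value, the sketch below uses the affine scheme used by TensorFlow Lite style converters, where real is approximately scale * (q - zero_point). The function names and the example scale and zero-point values are illustrative assumptions; in practice the conversion tool chooses these parameters and stores the weights already quantized.

```c
#include <stdint.h>

/* Affine int8 quantization: real ~= scale * (q - zero_point).
 * Names and parameters are illustrative, not taken from any tool. */
int8_t quantize_int8(float x, float scale, int32_t zero_point)
{
    float v = x / scale + (float)zero_point;

    /* Round half away from zero without pulling in libm. */
    v += (v >= 0.0f) ? 0.5f : -0.5f;

    /* Saturate to the int8 range before converting. */
    if (v > 127.0f)  return 127;
    if (v < -128.0f) return -128;
    return (int8_t)(int32_t)v;
}

/* Map a quantized value back to its approximate real value. */
float dequantize_int8(int8_t q, float scale, int32_t zero_point)
{
    return scale * (float)((int32_t)q - zero_point);
}
```

With scale = 0.5 and zero_point = 0, the value 1.0 maps to 2, and anything above 63.5 saturates at 127, which is why the scale has to match the real range of the data.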

3. Integrate with STM32 Project

A. Using STM32Cube.AI:

- Install STM32CubeMX + STM32Cube.AI plugin
- Import the .h5 model
- Enable X-CUBE-AI middleware
- Generate code
- Use aiRun() to pass input data and get the output class
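
However the generated code exposes its output, a multi-class result is usually a buffer of per-class scores, and the predicted class is the index of the largest score. A minimal sketch, assuming the output is a float array (argmax_f32 is a hypothetical helper, not part of X-CUBE-AI):

```c
#include <stddef.h>

/* Return the index of the largest score; n must be at least 1.
 * On ties, the lowest index wins. */
size_t argmax_f32(const float *scores, size_t n)
{
    size_t best = 0;
    for (size_t i = 1; i < n; ++i) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return best;
}
```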

B. Manual (for tiny models):

- Extract the weights and biases from the trained model
- Implement the forward pass in C
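
The manual route can be sketched as follows for a 2-4-3 network. This is a minimal float version under stated assumptions: the hidden layer uses ReLU, the weight and bias values are placeholders rather than trained parameters, and nn_predict is a hypothetical name. Softmax is skipped because the largest raw output score already identifies the class, which avoids an expensive exp() on a core without an FPU.

```c
#include <stddef.h>

#define N_IN  2
#define N_HID 4
#define N_OUT 3

/* Placeholder parameters for illustration only; replace them with the
 * values exported from the trained model. Layout here is [output][input].
 * Keras stores Dense kernels as [input][output], so transpose on export. */
static const float W1[N_HID][N_IN] = {
    { 1.0f,  0.0f},
    { 0.0f,  1.0f},
    {-1.0f,  0.0f},
    { 0.0f, -1.0f},
};
static const float B1[N_HID] = {0.0f, 0.0f, 0.0f, 0.0f};

static const float W2[N_OUT][N_HID] = {
    {1.0f, 0.0f, 0.0f, 0.0f},
    {0.0f, 1.0f, 0.0f, 0.0f},
    {0.0f, 0.0f, 1.0f, 1.0f},
};
static const float B2[N_OUT] = {0.0f, 0.0f, 0.0f};

/* Forward pass: dense + ReLU, then dense, then argmax over the scores. */
int nn_predict(const float x[N_IN])
{
    float h[N_HID];

    for (size_t i = 0; i < N_HID; ++i) {
        float acc = B1[i];
        for (size_t j = 0; j < N_IN; ++j) {
            acc += W1[i][j] * x[j];
        }
        h[i] = (acc > 0.0f) ? acc : 0.0f;   /* ReLU */
    }

    int best = 0;
    float best_score = 0.0f;
    for (size_t k = 0; k < N_OUT; ++k) {
        float acc = B2[k];
        for (size_t i = 0; i < N_HID; ++i) {
            acc += W2[k][i] * h[i];
        }
        if (k == 0 || acc > best_score) {
            best = (int)k;
            best_score = acc;
        }
    }
    return best;
}
```

The whole network needs 23 parameters and a 4-element scratch buffer, so it fits easily even in the smallest F103 parts.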

4. Run and Test

- Flash to STM32 using STM32CubeIDE
- Send input via UART or use ADC/sensors
- Print the predicted class
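
For UART-based testing, the text handling can be kept separate from the hardware calls so it can be checked on a PC first. The sketch below assumes a hypothetical line protocol of two comma-separated numbers such as "1.5,-0.25" and a reply of the form "class=N"; both helper names are made up for illustration. On the target, the line would arrive through the HAL UART receive functions and the reply would be sent with HAL_UART_Transmit(), which are omitted here.

```c
#include <stdio.h>
#include <stdlib.h>

/* Parse "x0,x1" into two floats. Returns 0 on success, -1 on bad input.
 * strtof is used instead of sscanf so no float-scanf support is needed. */
int parse_input_line(const char *line, float x[2])
{
    char *end;

    x[0] = strtof(line, &end);
    if (end == line || *end != ',') {
        return -1;              /* no first number, or no separator */
    }

    const char *second = end + 1;
    x[1] = strtof(second, &end);
    if (end == second) {
        return -1;              /* no second number */
    }
    return 0;
}

/* Format the reply line; returns the number of characters written
 * (excluding the terminating NUL), as snprintf does. */
int format_result(char *buf, size_t len, int predicted_class)
{
    return snprintf(buf, len, "class=%d\r\n", predicted_class);
}
```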

Optimization Tips

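- Use fixed-point arithmetic (Q7, Q15 types)
- Use CMSIS-DSP for matrix operations
- Use CMSIS-NN where it fits; its SIMD-optimized kernels need the DSP extension (Cortex-M4 and later), so on the Cortex-M3 it runs its portable C implementation
- Keep model size tiny: e.g., 2–1–3 or 2–4–3 architecture (input, hidden, and output layer sizes)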
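
To make the fixed-point tip concrete, here is a minimal sketch of a dense layer with ReLU in Q7 format, where an int8 value q represents q / 128. It is hand-written for illustration and is not the CMSIS-NN or CMSIS-DSP implementation; the function names are assumptions, and it assumes weights, biases, and activations all share the Q7 format.

```c
#include <stdint.h>
#include <stddef.h>

/* Same underlying type as the q7_t typedef in CMSIS-DSP; drop this line
 * if arm_math.h is already included. */
typedef int8_t q7_t;

/* One neuron: Q7 dot product with a Q14 accumulator, ReLU, back to Q7. */
q7_t neuron_relu_q7(const q7_t *w, q7_t b, const q7_t *x, size_t n)
{
    int32_t acc = (int32_t)b * 128;          /* bias: Q7 -> Q14 */

    for (size_t i = 0; i < n; ++i) {
        acc += (int32_t)w[i] * (int32_t)x[i]; /* Q7 * Q7 = Q14 */
    }

    if (acc < 0) {
        acc = 0;                              /* ReLU before rescaling */
    }
    acc = (acc + 64) >> 7;                    /* round, Q14 -> Q7 */

    return (acc > 127) ? (q7_t)127 : (q7_t)acc;   /* saturate */
}

/* Dense layer: w is row-major with n_out rows of n_in weights each. */
void dense_relu_q7(const q7_t *w, const q7_t *b, const q7_t *x,
                   q7_t *y, size_t n_in, size_t n_out)
{
    for (size_t o = 0; o < n_out; ++o) {
        y[o] = neuron_relu_q7(&w[o * n_in], b[o], x, n_in);
    }
}
```

For example, weights {64, 64} and inputs {64, 64} (all 0.5 in Q7) give 64, matching 0.5 * 0.5 + 0.5 * 0.5 = 0.5. Applying ReLU before the shift keeps the shifted value non-negative, which sidesteps implementation-defined right shifts of negative numbers.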
