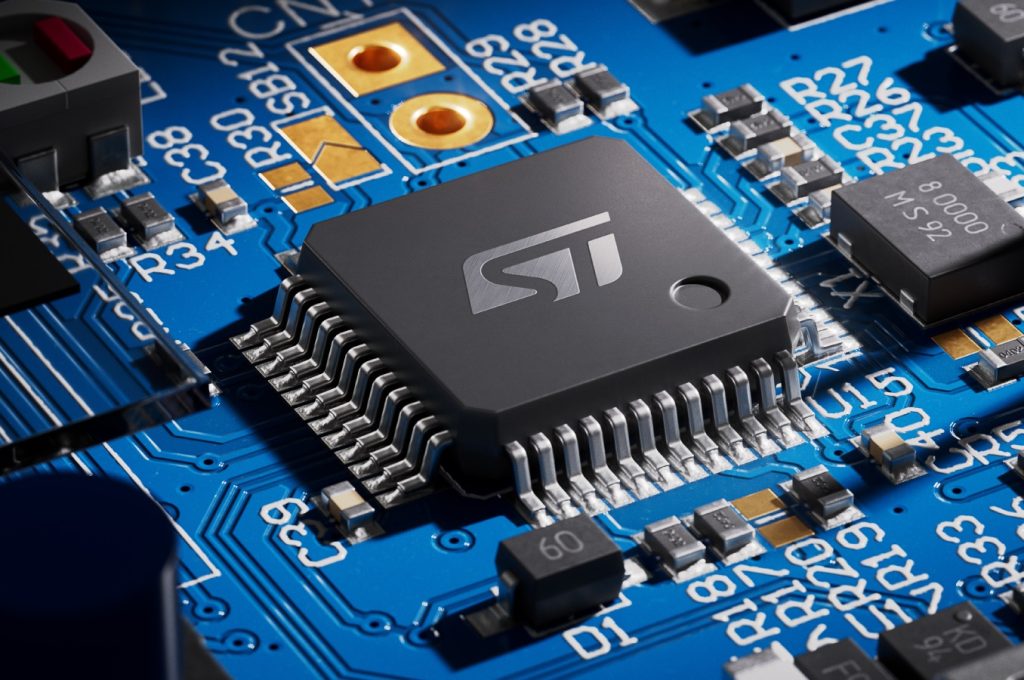

How to deploy artificial intelligence algorithms on STM32?

May 23 2025


Global electronic component supplier AMPHEO PTY LTD: Rich inventory for one-stop shopping. Inquire easily, and receive fast, customized solutions and quotes.


Deploying artificial intelligence (AI) algorithms on STM32 microcontrollers means converting trained models into optimized code that fits within the constrained resources of STM32 devices: limited memory, modest CPU performance, and a tight energy budget.
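A core part of that conversion is usually quantization. Weights and activations are stored as 8-bit integers instead of 32-bit floats, which cuts weight storage roughly fourfold and lets the Cortex-M core work with integer arithmetic. The C sketch below illustrates the idea for a single fully connected layer, assuming symmetric per-tensor int8 quantization with int32 accumulation. The function names (`choose_scale`, `quantize_int8`, `dense_int8`) are hypothetical. This is not the code generated by ST's X-CUBE-AI (STM32Cube.AI) or by TensorFlow Lite for Microcontrollers, which automate this conversion and much more.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helpers illustrating symmetric per-tensor int8 quantization.
   <math.h> is avoided so the sketch builds without libm on bare-metal toolchains. */

static float abs_f(float v) { return v < 0.0f ? -v : v; }

/* Pick a scale so that the largest |x| maps to 127. */
float choose_scale(const float *x, size_t n) {
    float max_abs = 0.0f;
    for (size_t i = 0; i < n; ++i) {
        float a = abs_f(x[i]);
        if (a > max_abs) {
            max_abs = a;
        }
    }
    return max_abs > 0.0f ? max_abs / 127.0f : 1.0f;
}

/* q[i] = round(x[i] / scale), clamped to [-127, 127], rounding half away from zero. */
void quantize_int8(const float *x, int8_t *q, size_t n, float scale) {
    assert(scale > 0.0f);
    for (size_t i = 0; i < n; ++i) {
        float r = x[i] / scale;
        if (r > 127.0f) r = 127.0f;
        if (r < -127.0f) r = -127.0f;
        q[i] = (int8_t)(r >= 0.0f ? r + 0.5f : r - 0.5f);
    }
}

/* Fully connected layer y = W x + b using int8 weights/inputs and an int32 accumulator.
   W is row-major [out_dim][in_dim]. If bias is non-NULL it is assumed to be pre-quantized
   to int32 with scale (w_scale * x_scale). The result is dequantized back to float. */
void dense_int8(const int8_t *w, const int8_t *x, const int32_t *bias,
                float w_scale, float x_scale,
                size_t out_dim, size_t in_dim, float *y) {
    for (size_t o = 0; o < out_dim; ++o) {
        int32_t acc = bias ? bias[o] : 0;
        for (size_t i = 0; i < in_dim; ++i) {
            acc += (int32_t)w[o * in_dim + i] * (int32_t)x[i];
        }
        y[o] = (float)acc * w_scale * x_scale;
    }
}
```

In a typical deployment the quantization of weights happens offline on a PC, and only the integer inner loop (with the int8 weights stored in flash) runs on the MCU. Each int8 × int8 product is at most 127 × 127 = 16129 in magnitude, so the int32 accumulator has ample headroom for the layer sizes that fit on an STM32.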

